Video processing can be a computationally intensive task, and since computing power is often at a premium, the more we can speed up a video processing pipeline, the better. This is especially true for applications that require real-time processing, like processing a video stream from a webcam. While it’s important that the image processing portion of a video processing pipeline be streamlined, input/output (I/O) operations also tend to be a major bottleneck. One way to alleviate this is to split the computational load between multiple threads. It’s important to note that, in Python, there exists something called the global interpreter lock (GIL), which prevents multiple threads from accessing the same object. However, as the Python Wiki tells us:

…potentially blocking or long-running operations, such as I/O, image processing, and NumPy number crunching, happen outside the GIL. Therefore it is only in multithreaded programs that spend a lot of time inside the GIL … that the GIL becomes a bottleneck.

In other words, blocking I/O operations (which “block” the execution

of later code until the current code executes), like reading or displaying video

frames, are ripe for multithreaded execution. In this post, we’ll examine

the effect of putting calls to cv2.VideoCapture.read() and cv2.imshow() in

their own dedicated threads.

All the code used in this post can be found on Github.

Measuring changes in performance

First, we must define “performance” and how we intend to evaluate

it. In a single-threaded video processing application, we might have the main

thread execute the following tasks in an infinitely looping while loop: 1) get a

frame from the webcam or video file with cv2.VideoCapture.read(), 2) process

the frame as we need, and 3) display the processed frame on the screen with a

call to cv2.imshow(). By moving the reading and display operations to other

threads, each iteration of the while loop should take less time to execute.

Consequently, we’ll define our performance metric as the number of

iterations of the while loop in the main thread executed per second.

To measure iterations of the main while loop executing per second, we’ll

create a class and call it CountsPerSec,

which can be found on Github.

|

|

We import the datetime module to track the elapsed time. At the end of each

iteration of the while loop, we’ll call increment() to increment the

count. During each iteration, we’ll obtain the average iterations per

second for the video with a call to the countsPerSec() method.

Performance without multithreading

Before examining the impact of multithreading, let’s look at performance

without it. Create a file named thread_demo.py, or

grab it on Github and follow along.

|

|

We begin with some imports, including the CountsPerSec class we made above. We

haven’t covered the VideoGet and VideoShow classes yet, but these will

be used to execute the tasks of getting video frames and showing video frames,

respectively, in their own threads. The function putIterationsPerSec()

overlays text indicating the frequency of the main while loop, in iterations per

second, to a frame before displaying the frame. It accepts as arguments the

frame (a numpy array) and the iterations per second (a float), overlays the

value as text via cv2.putText(), and returns the modified frame.

Next, we’ll define a function, noThreading(), to get frames, compute and

overlay the iterations per second value on each frame, and display the frame.

|

|

The function takes the video source as its only argument. If given an integer,

source indicates that the video source is a webcam. 0 refers to the first

webcam, 1 would refer to a second connected webcam, and so on. If a string is

provided, it’s interpreted as the path to a video file. On lines

19-20, we create an OpenCV VideoCapture object to grab and decode frames

from the webcam or video file, as well as a CountsPerSec object to track the

main while loop’s performance. Line 22 begins the main while loop. On

line 23, we utilize the VideoCapture object’s read() method to get

and decode the next frame of the video stream; it returns a boolean, grabbed,

indicating whether or not the frame was successfully grabbed and decoded, as

well as the frame itself in the form of a numpy array, frame. On line 24,

we check if the frame was not successfully grabbed or if the user pressed the

“q” key to quit and exit the program. In either case, we halt

execution of the while loop with break. Barring either condition, we continue

by simultaneously obtaining and overlaying the current “speed” of

the loop (in iterations per second) in the lower-left corner of the frame on

line 27. Finally, the frame is displayed on the screen on line 28 with a

call to cv2.imshow(), and the iteration count is incremented on line 29.

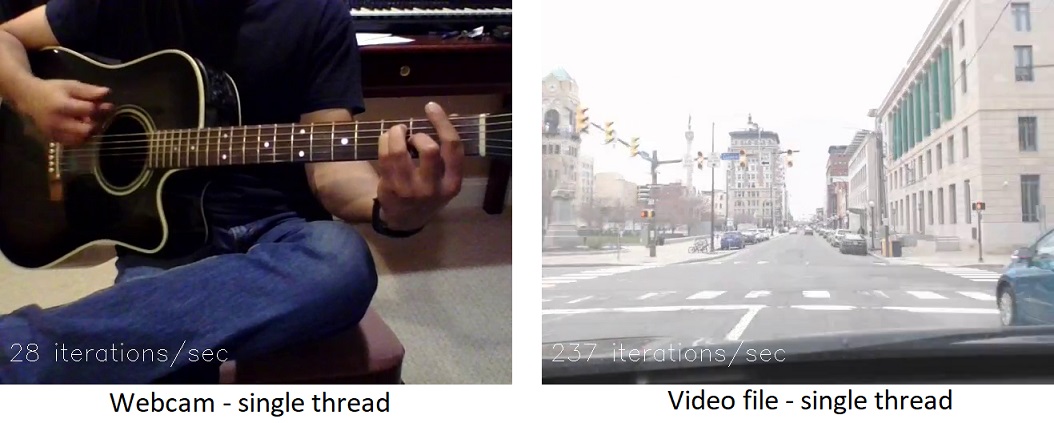

What do the results look like for both a webcam and a video file? These are the values I got on my hardware:

Reading from a webcam, the while loop executed about 28 iterations/second. Reading from an AVI file, about 240 iterations/second. These will serve as our baseline values.

A separate thread for getting video frames

What happens if we move the task of getting frames from the webcam or video file

into a separate thread? To do this, we’ll first define a class called

VideoGet in a file named VideoGet.py,

which can be found on Github.

|

|

We import the threading module, which will allow us to spawn new threads. In

the class __init__() method, we initialize an OpenCV VideoCapture object and

read the first frame. We also create an attribute called stopped to act as a

flag, indicating that the thread should stop grabbing new frames.

|

|

The start() method creates and starts the thread on line 16. The thread

executes the get() function, defined on line 19. This function

continuously runs a while loop that reads a frame from the video stream and

stores it in the class instance’s frame attribute, as long as the

stopped flag isn’t set. If a frame is not successfully read (which might

happen if the webcam is disconnected or the end of the video file is reached),

the stopped flag is set True by calling the stop() function, defined on

line 26.

We’re now ready to implement this class. Returning to the file

thread_demo.py, we define a function threadVideoGet(), which

will use the VideoGet object above to read video frames in a separate thread

while the main thread displays the frames.

|

|

The function is pretty similar to the noThreading() function discussed in the

previous section, except we initialize the VideoGet object and start the

second thread on line 37. On lines 41-43 of the main while loop, we

check to see if the user has pressed the “q” key or if the

VideoGet object’s stopped attribute has been set True, in which case

we halt the while loop. Otherwise, the loop gets the frame currently stored in

the VideoGet object on line 45, then proceeds to process and display it as

in the noThreading() function.

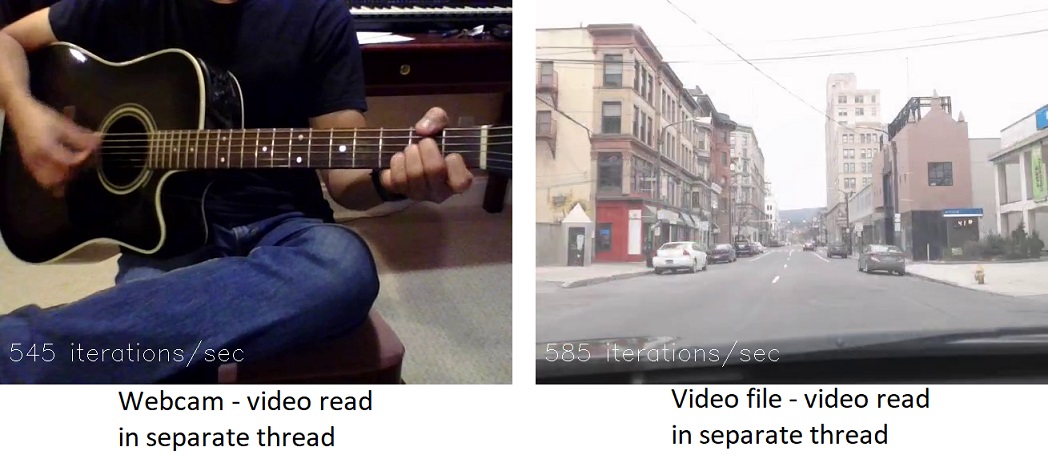

How does the function perform on a webcam video stream and on a video file?

Quite the difference compared to the single-thread case! With the frame-getting task in a separate thread, the while loop executed 545 iterations/second for a webcam and 585 iterations/second for a video file. At this point, it’s important to note that these values do not correspond to framerate or FPS. The video FPS is largely limited by the camera hardware and/or the speed with which each frame can be grabbed and decoded. The iterations per second simply show that the main while loop is able to execute more quickly when some of the video I/O is off-loaded to another thread. It demonstrates that the main thread can do more processing when it isn’t also responsible for reading frames.

A separate thread for showing video frames

To move the task of displaying video frames to a separate thread, we follow a

procedure similar to the last section and define a class called VideoShow in a

file named VideoShow.py, which, as before,

can be found on Github. The class definition begins just like the VideoGet

class:

|

|

This time, the new thread calls the show() method, defined on line 17.

|

|

Note that checking for user input, on line 20, is achieved in the separate

thread instead of the main thread, since the OpenCV waitKey() function

doesn’t necessarily play well in multithreaded applications and I found it

didn’t work properly when placed in the main thread. Once again, returning

to

thread_demo.py, we define a function called

threadVideoShow().

|

|

As before, this function is similar to the noThreading() function, except we

initialize a VideoShow object, which I’ve named video_shower

(that’s “shower” as in “something that shows,” not

“shower” as in “water and shampoo”) and start the new

thread on line 58. Line 63 checks indirectly if the user has pressed

“q” to quit the program, since the VideoShow object is actually

checking for user input and setting its stopped attribute to True in the event

that the user presses “q”. Line 68 sets the VideoShow

object’s frame attribute to the current frame.

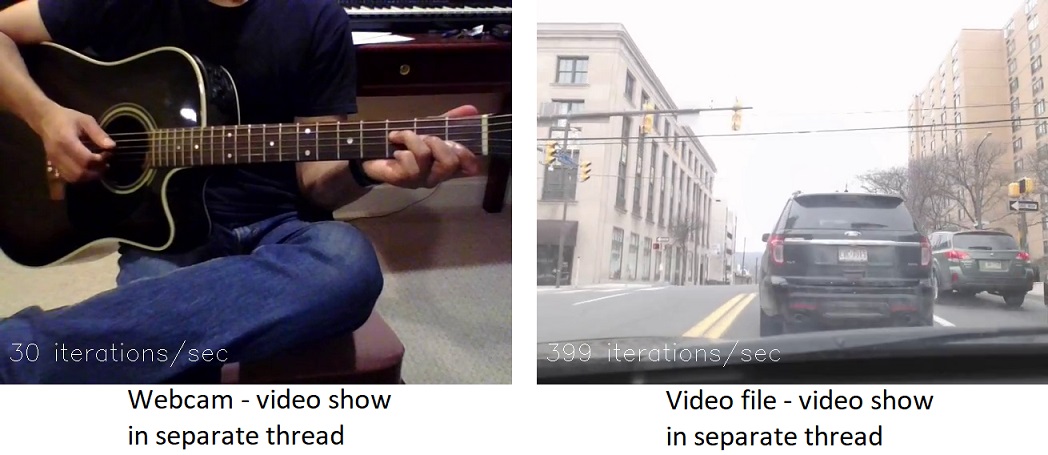

And the result?

This is interesting. The webcam performs at 30 iterations/second, only slightly faster than the 28 obtained in the case of a single thread. However, the video file performs at ~400 iterations/second—faster than its single-thread counterpart (240 iterations/second) but slower than the video file with video reading in a separate thread (585 iterations/second). This suggests that there’s a fundamental difference between reading from a camera stream and from a file, and that the primary bottleneck for a camera stream is reading and decoding video frames.

Separate threads for both getting and showing video frames

Finally, we’ll implement a function named threadBoth() in

thread_demo.py that creates a thread for getting video frames via the

VideoGet class and another thread for displaying frames via the VideoShow

class, with the main thread existing to process and pass frames between the two

objects.

|

|

This function is a mixture of the threadVideoGet() and threadVideoShow()

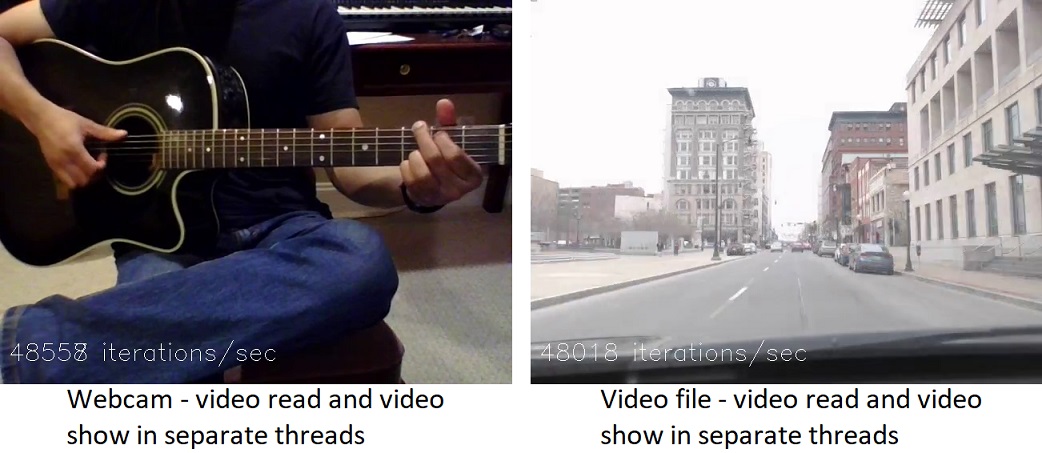

functions, which turns out to have a very interesting result:

This seems to be at odds with the previous conclusion, which suggested that reading frames was the primary bottleneck for the webcam. For whatever reason, the combination of putting both frame-reading and frame-display in dedicated threads bumps the performance in both cases up to a whopping ~48000 iterations/second. Not being as well-versed in multithreading as I’d like to be, I can’t quite explain this result. Regardless, it appears fairly clear that using multithreading for video I/O can free up considerable resources for performing other image processing tasks.